The generic Prompt Engineer role is dead.

It’s December 2025. The timeline is critical.

If your goal is to land a high-leverage remote Prompt Engineering job in 2026, abandon the entry-level mindset now. Immediately.

The market matured faster than experts predicted. Companies,especially those focused on automated lead generation (our core business),are not hiring for simple prompt optimization anymore.

They demand measurable revenue impact. They require specialized technical depth.

This guide cuts the noise. We analyzed thousands of job postings, benchmarked salary data, and mapped competitive gaps. This is the precise blueprint for maximizing your remote earning potential in 2026.

✅ Key Takeaways for 2026

- Salary Projection: Expect the US national average for mid-level specialists to climb past $100,000. This increase is driven purely by specialization and demonstrable ROI.

- Skill Shift: Foundational RAG and Python are now entry-level filters. The high-value skills are Prompt Version Control, LLMOps Integration, and Agentic Workflow Design. These skills equal revenue growth.

- Remote Arbitrage: Remote pay tiers are solidifying. US High COL roles pay 40–70% more than Global Contract roles for the same technical output. Choose your target market strategically.

- The New Interview: Expect mandatory “Prompt-Off” technical challenges. Interviews will focus on whiteboarding sessions centered on RAG system design and cost optimization.

Section 1: The Definitive 2026 Prompt Engineer Salary Breakdown

Competitors are still tracking 2025 averages. That data is already stale.

We don’t rely on lagging indicators. We operate on predictive analysis: The specialized Prompt Engineering market shows a 32.8% Compound Annual Growth Rate (CAGR).

This explosive growth confirms intense, specialized demand. It pushes compensation far beyond the reported $73,935 average seen in early 2025.

The future demands specialized compensation.

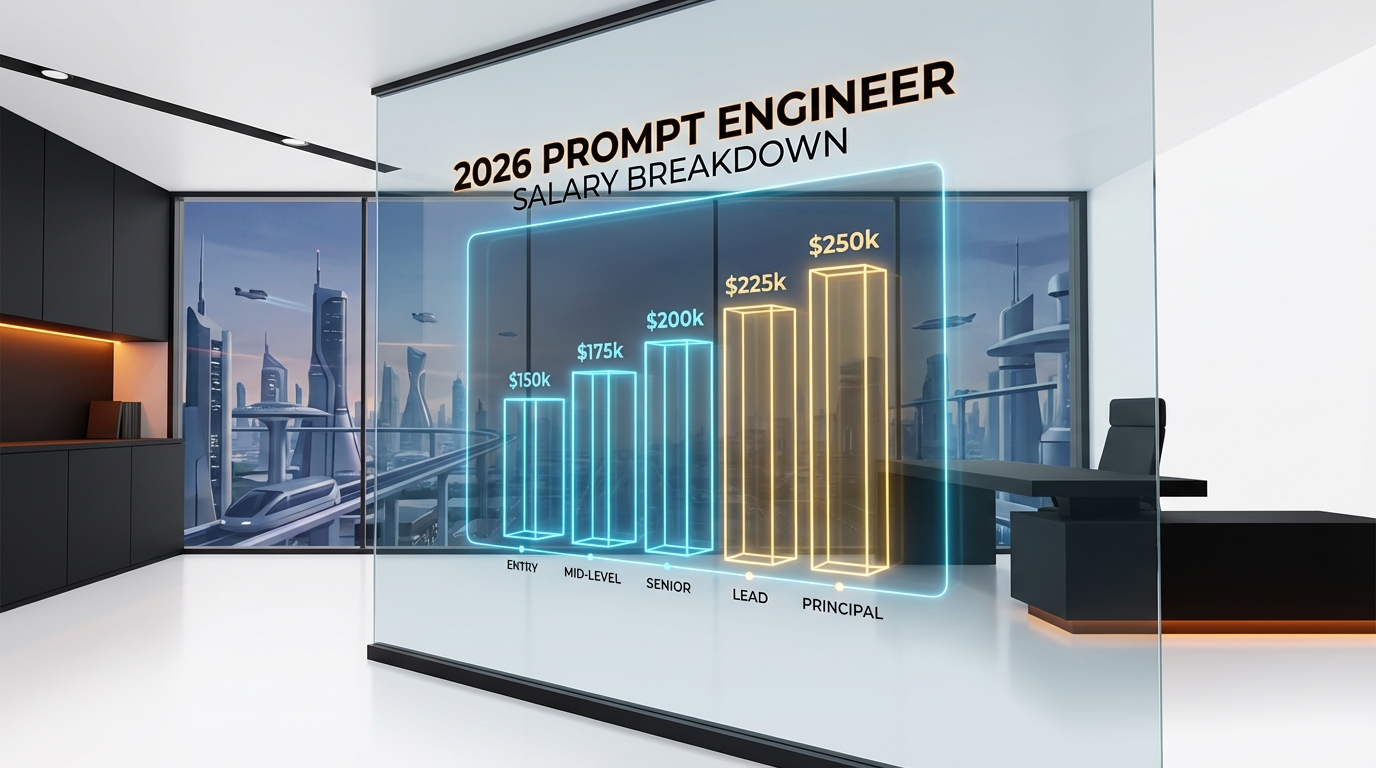

1.1: The 2026 Salary Projection by Seniority

We project a minimum 15–20% compensation increase across the board. This is strictly for roles demanding advanced, revenue-driving skills (the specialization we outline in Section 2).

If you are only “writing prompts,” your salary ceiling is fixed,and low.

If you are designing, implementing, and optimizing complex Retrieval-Augmented Generation (RAG) pipelines for organic lead generation (our core focus), your ceiling is virtually unlimited. This is the quantifiable difference in value.

| Role Tier | 2025 US Average Baseline | 2026 Projected Salary Range (Remote) | Key Responsibility Shift (ROI Focus) |

|---|---|---|---|

| Junior/Entry Level | $55,000 – $75,000 | $65,000 – $85,000 | Basic prompt iteration, content generation QA, data labeling. |

| Mid-Level Specialist | $75,000 – $110,000 | $100,000 – $135,000 | RAG pipeline optimization, structured output (JSON) enforcement for APIs, prompt versioning for consistent lead quality. |

| Senior/Lead Architect | $120,000 – $185,000 | $160,000 – $250,000+ | LLMOps integration, Agentic design (full automation), prompt security (Red Teaming), API cost optimization (direct P&L impact). |

1.2: The Remote Salary Arbitrage Model (2026)

The term “Remote” is no longer a monolith.

Companies are ruthlessly applying geographic adjustments. This applies even to high-value, US-based talent.

Founders: You must understand this precise tiering. It dictates your viable talent pool, your budget allocation, and ultimately, your project ROI.

- Tier 1: High Cost of Living (HCOL) Remote: San Francisco, NYC, Seattle. These roles pay 100% of the local salary benchmark. They demand demonstrable, immediate ROI: Zero learning curve, immediate execution.

- Tier 2: National Remote: Roles paying a blended average, typically 85-95% of the HCOL rate. This is the sweet spot for specialized talent living in lower-cost US regions. High quality, slightly optimized compensation.

- Tier 3: Global/Contract Remote: These roles pay significantly less, often 40-60% of US national rates. They are typically contract or freelance positions, focusing purely on volume output. If you are looking to scale high-ticket income, focus on specialization (Tier 1/2), not low-cost arbitrage.

“The core value proposition of prompt engineering in 2026 is cost savings and reliability. We don’t pay you to be creative; we pay you to engineer reliable, predictable, and scalable output systems that reduce our compute costs and ensure lead quality.”

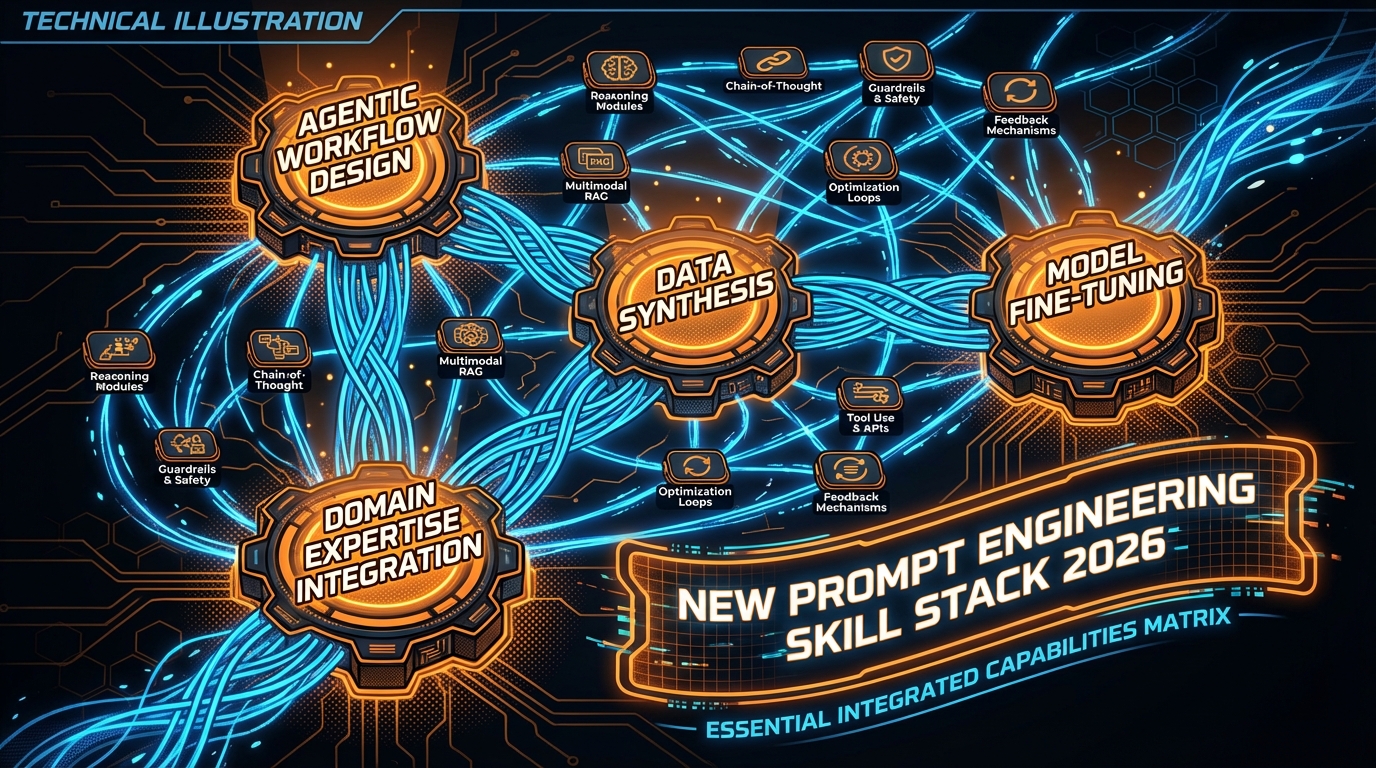

Section 2: The New Prompt Engineering Skill Stack (2026)

If your resume stops at RAG and basic Python, you are competing against the bottom 90% of applicants.

We need to move past fundamentals. Now.

High-leverage roles demand operationalizing AI at scale. This means direct integration into our core lead generation and conversion funnels.

2.1: Mandatory Technical Skills for Seniority

These are the skills that differentiate an $80k job from a $180k job:

- Prompt Version Control & Testing: Treat prompts as production-level code. You must use robust frameworks (e.g., PromptLayer, internal systems) to A/B test variations, track performance, and guarantee stable, measurable output. No disciplined engineering approach here means no seniority.

- LLMOps Integration: Prompt design directly dictates system reliability. You must understand latency, token usage, and monitoring protocols. We require predictable, low-latency output for our automated SDR workflows. That is non-negotiable.

- Advanced Retrieval Techniques: Simple RAG is dead. Mandate expertise in hybrid search (vector + keyword) and re-ranking models (e.g., Cohere). Optimizing chunking strategies guarantees retrieval of the exact client data needed for high-conversion personalized outreach.

- Agentic Design Patterns: This is the immediate future of automation. Design complex, multi-step workflows where the model autonomously uses tools. Example: Calling our internal email API after researching a client’s specific tech stack and identifying the decision-maker.

2.2: The Rise of Prompt Security (Red Teaming)

We are integrating LLMs directly into core lead generation and client communication systems. Security risks are skyrocketing.

Prompt injection attacks,where malicious users hijack instructions,are a massive liability for our brand trust and revenue streams.

This creates a high-paying specialization: Prompt Security Engineer.

Your job is not to write good prompts. Your job is to break the system. Identify vulnerabilities, red team the workflows, and design robust guardrails.

This role commands top-tier salaries because it directly mitigates significant legal, financial, and reputational risk. It protects our operation.

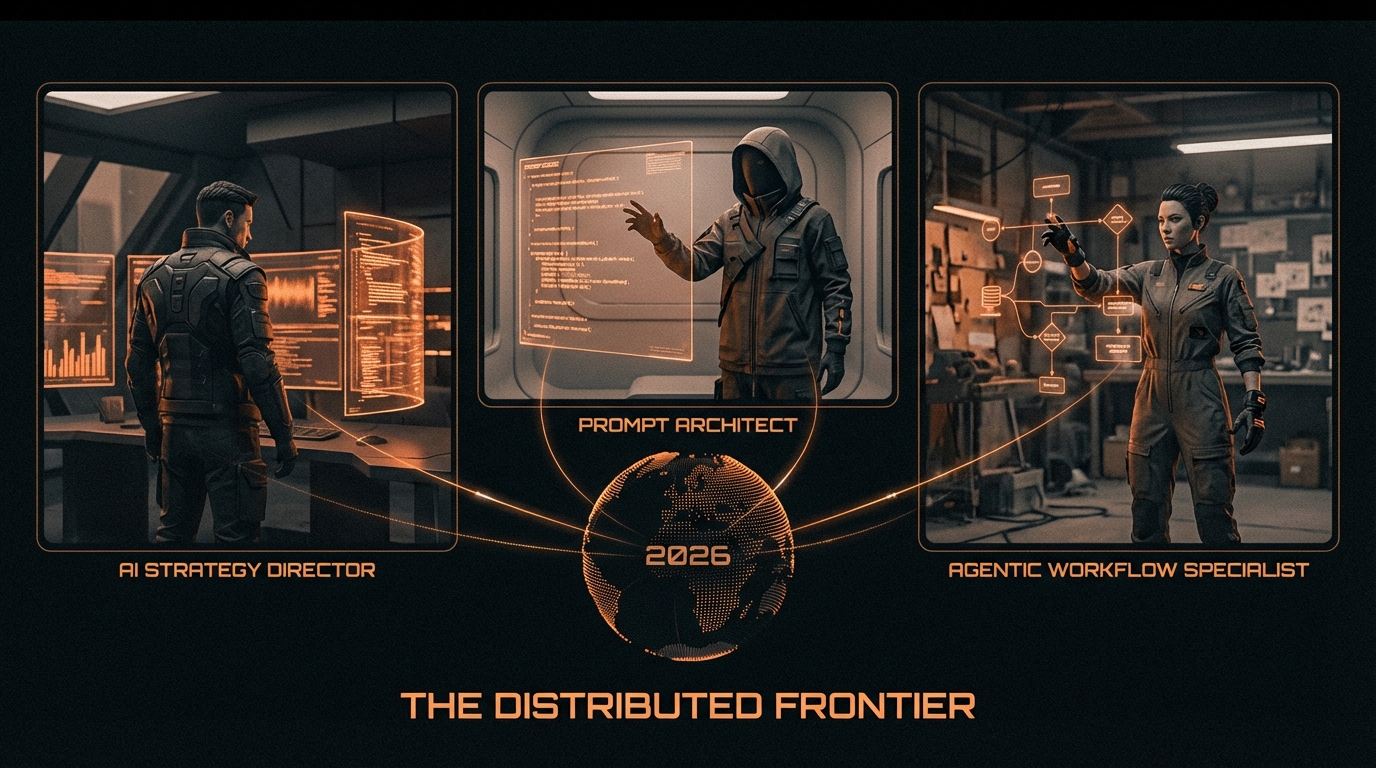

Section 3: Hottest Remote Prompt Engineering Roles & Archetypes (2026)

Stop wasting time searching for the generic title: “Prompt Engineer.”

That title is dead. We need high-leverage specialization tied directly to business automation and revenue generation.

These are the three highly paid archetypes dominating the remote market in 2026:

3.1: The Agentic Workflow Designer

This role orchestrates autonomous AI agents. They execute complex, multi-step tasks,like full lead qualification and automated email drafting systems. This is how we scale our outreach.

- Focus: Designing the flow (using LangChain, AutoGen, or internal frameworks). The LLM must use tools, access our CRM, and make dynamic decisions based on live client data.

- Value: Direct automation of core sales and marketing processes. This role replaces entire manual SDR/BDR workflows, driving massive, measurable efficiency gains (i.e., lower cost per qualified lead).

- Hiring Companies: SaaS platforms, large enterprise sales operations, and specialized innovation labs focused on conversion rate optimization.

3.2: The Data-Driven Alignment Specialist

This specialist is our quality control layer. Their mandate: guarantee brand voice consistency and factual accuracy across every automated client interaction.

You cannot scale lead generation if your AI output is inconsistent or off-brand.

- Focus: Creating high-quality evaluation datasets (Evals) and Golden Prompts. They measure model drift and track RAG retrieval effectiveness over time (i.e., is the AI still accurate?).

- Value: Ensures AI-generated outreach consistently meets strict brand guidelines and compliance benchmarks. This is a critical trust function necessary for high-volume B2B communication.

- Required Tools: Monitoring platforms (e.g., Weights & Biases), vector databases (Pinecone, Qdrant), and statistical analysis for actionable error rate tracking.

3.3: The Product Prompt Manager

This is the highest-leverage, non-coding role available. You own the prompt roadmap. You interface directly with sales leadership and product management.

The prompt is now a core business asset. You treat it as such.

- Focus: Defining user experience through AI interactions. Translating strict sales goals and product requirements into prompt specifications, specifically focusing on user journeys and measurable conversion metrics.

- Value: Directly ties prompt performance to critical product KPIs (e.g., feature adoption, retention, lead qualification rate). This role dictates revenue outcomes.

- Skills: Mastery of product management methodology (Agile/Scrum), elite communication skills, and the ability to articulate technical limitations to non-technical executive stakeholders.

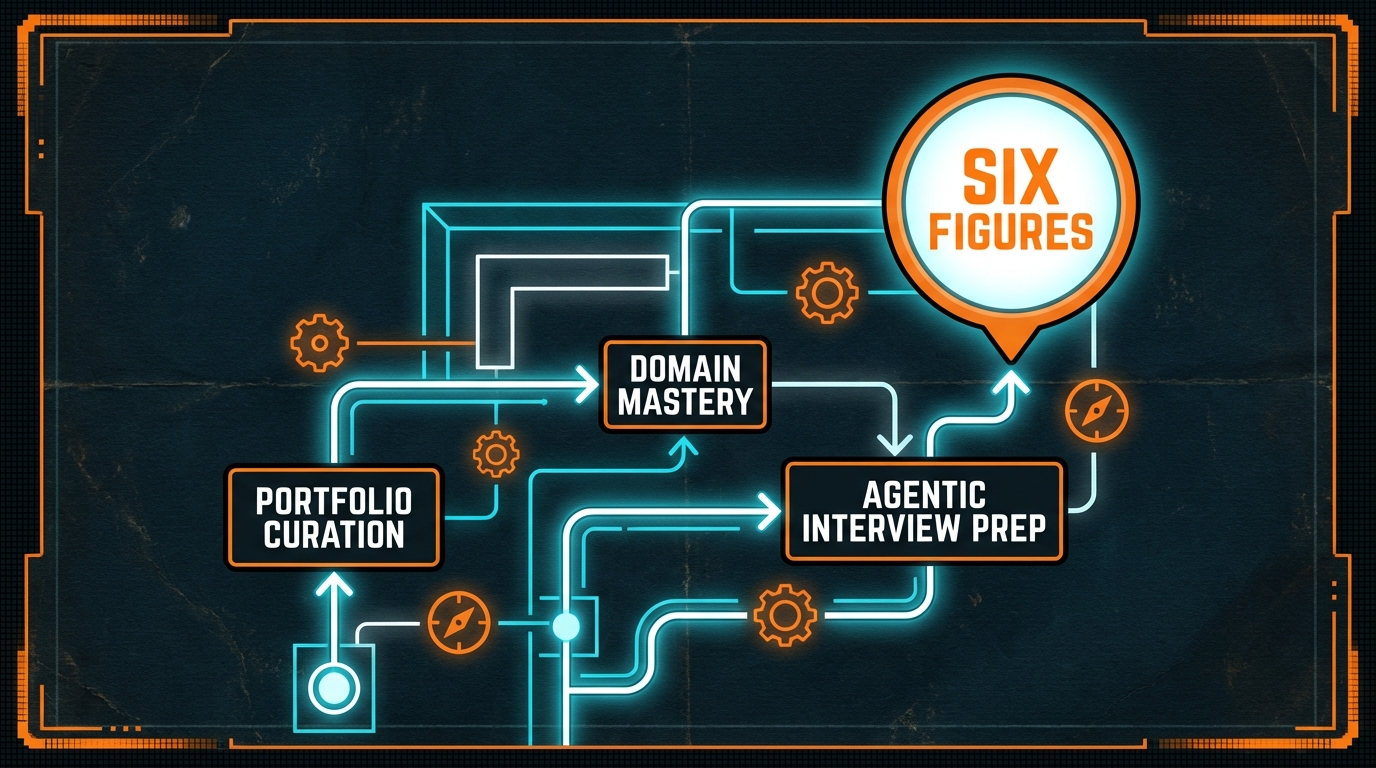

Section 4: The 2026 Blueprint for Getting Hired (Six Figures or Nothing)

The interview process has evolved drastically. Generic portfolios showing off “creative writing” prompts are worthless. They will not secure a job paying over $100k.

We need a systematic, results-oriented approach. This is the blueprint for securing high-leverage remote roles.

4.1: The Portfolio of Measurable Impact

Your portfolio is not a gallery of outputs. It is a financial report.

It must demonstrate tangible ROI: revenue increase, cost reduction, or risk mitigation. Always quantify your results. Never use vague language.

Showcase projects that hit these three requirements:

- Quantify Performance: Stop showing the prompt and the output. Show the evaluation metric instead. Example: “Reduced factual error rate by 45% using hybrid search RAG pipeline for internal sales docs.” That is the language of business.

- Demonstrate Version Control: Use GitHub. Show the iteration history of a single complex prompt sequence. Explain precisely why version 3.1 outperformed 2.0 (e.g., reduced latency by 1.2s; cut token cost by 18%).

- Solve a Business Problem: Focus strictly on real-world applications. Think automated sales email drafting, hyper-personalized outreach generation, or internal knowledge retrieval systems for SDRs.

4.2: Navigating the 2026 Technical Interview

Forget basic behavioral questions. That era is dead.

You must expect high-pressure, scenario-based technical challenges. We are testing for engineering discipline, not theoretical knowledge.

Step #1: The Prompt-Off Challenge

The first test is immediate execution. You will face a specific, ambiguous task,a scenario pulled directly from our revenue workflow.

Example: “Summarize the last 10 quarterly earnings reports into a 5-point SWOT analysis for a new investor.”

You get 30 minutes to iterate and refine a prompt using a specific LLM.

Goal Check: Your goal is reliability, not speed. You must explain your reasoning for every single token used (system prompt, few-shot examples, delimiters). We are testing your repeatable process. Creativity is secondary to measurable output control.

Step #2: RAG System Design (Whiteboarding)

Senior roles require system design mastery. You will whiteboard a Retrieval-Augmented Generation (RAG) architecture for a critical business use case.

Scenario Example: “Designing an internal AI assistant for our global SDR team to handle real-time prospect data.”

You must fluently discuss the entire pipeline:

- Chunking strategy (recursive vs. fixed size).

- Metadata filtering and pre-retrieval routing (crucial for relevance).

- Post-processing steps (re-ranking, confidence scoring, output validation).

- Security protocols (how do we handle sensitive CRM or lead data?).

If you cannot map the entire query lifecycle,from user input to final, validated LLM response,you fail the technical round. We need architects, not just prompt writers.

Step #3: Cost and Latency Optimization

This step determines your salary bracket. Senior roles demand financial accountability.

Be ready for direct challenge questions like: “How would you reduce the API cost of this specific lead generation workflow by 30% without sacrificing lead quality?”

Your response must focus on measurable efficiency gains:

- Strategic Model Switching: Moving from heavyweight models (like GPT-4o) to fine-tuned smaller models (like Mistral or specialized open-source options) where performance parity exists.

- Aggressive Prompt Compression: Removing every unnecessary token and context layer. Tokens cost money.

- Batch Processing Implementation: Shifting non-real-time queries (e.g., nightly lead enrichment or bulk email personalization) into cost-effective batch runs.

We prioritize efficiency above all. Show us you prioritize our bottom line. That is how you earn top-tier remote pay.

Frequently Asked Questions

1. Is Prompt Engineering a sustainable career in 2026?

Answer: Yes. But the role is actively transforming,right now. Simple prompt writing is dead weight; model improvements and automation handle that now. The sustainable path is Prompt Architecture or Prompt Operations (LLMOps). We are talking about managing infrastructure, establishing testing protocols, and deploying prompts at massive scale. Stop writing. Start managing systems.

2. What is the difference between a Prompt Engineer and an LLM Engineer?

Answer: The distinction is operational leverage.

- LLM Engineer: Focuses on core infrastructure. Think fine-tuning, model training, deployment pipelines, and raw scalability of the model itself.

- Prompt Architect: Focuses purely on the interface layer. This involves designing optimal instruction sets, building robust context retrieval systems (RAG), and creating evaluation frameworks. Their goal: maximizing model performance for specific, measurable business outcomes.

3. Do I need a degree in Computer Science to be a Prompt Engineer?

Answer: For any six-figure role (e.g., Agentic Designer, Prompt Security), a strong technical foundation is mandatory. The degree is secondary. Results are primary.

You need demonstrated proficiency in:

- Python and robust scripting.

- The software development lifecycle (SDLC).

- System architecture design.

A CS, Linguistics, or Data Science degree certainly helps. But equivalent, measurable work experience replaces the degree entirely. Show us the revenue increase your system generated. That is the only metric that matters.

Ready to scale your lead generation with AI?

Pyrsonalize provides the AI Lead Generation Software needed to find clients’ personal emails and scale outreach. Stop guessing. Start converting.

Start Your Free Trial