The Predictive Lead Scoring Architecture: Step-by-Step Deployment

Moving past the hype: A predictive scoring model is only as good as the data you feed it. Many organizations invest six figures in AI tools only to realize their foundational data is unusable.

We approach predictive lead scoring (PLS) deployment as a structured, three-phase engineering project. Failure at Phase 1 (data preparation) guarantees failure in the sales funnel.

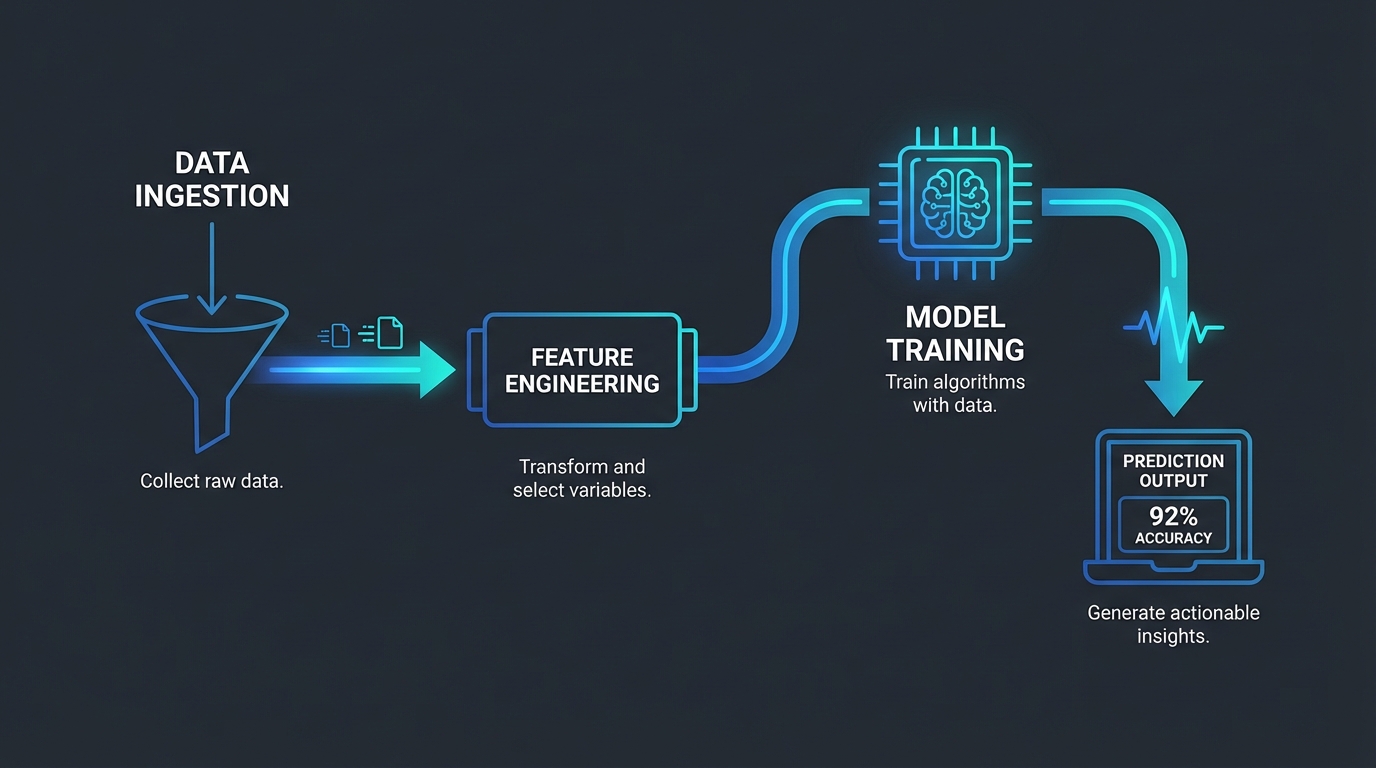

Step #1: Data Preparation and Feature Engineering (The 90% Problem)

This is where 90% of all predictive lead scoring projects stall. You need high-quality, labeled historical data that accurately reflects your successful conversions over the last 12–24 months.

If your CRM data is messy (duplicate entries, missing firmographics, inconsistent activity logging), the model will learn those inconsistencies. Garbage in, garbage out.

A. Data Labeling: Defining Success

The model requires clear labels to learn. You must define what constitutes a positive outcome (1) and a negative outcome (0).

- Positive Label (1): A lead that converted to a paying customer or reached a specific high-value stage (e.g., Opportunity Closed-Won).

- Negative Label (0): A lead that went cold, unsubscribed, or was disqualified by an SDR after initial contact (Opportunity Closed-Lost).

The quality of this labeling is paramount. If you label a lead that converted after 18 months as ‘0’ because they initially stalled, your model will wrongly penalize similar high-potential leads.
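One way to enforce this in practice is to label only leads whose full conversion window has already elapsed, and to leave younger leads out of the training set instead of labeling them ‘0’. The sketch below assumes pandas and a hypothetical CRM export with `created_at` and `won_at` (Closed-Won date) columns; the function name and the 540-day (about 18-month) window are illustrative choices, not values prescribed by this guide.

```python
import pandas as pd


def label_leads(leads: pd.DataFrame, as_of: pd.Timestamp, window_days: int) -> pd.DataFrame:
    """Label mature leads 1 (won within the window) or 0; drop leads still in flight.

    Assumes hypothetical columns `created_at` and `won_at` (NaT if never won).
    """
    window = pd.Timedelta(days=window_days)
    # A lead can only be labeled once its whole window has elapsed; younger
    # leads might still convert, so they are excluded rather than labeled 0.
    mature = leads[leads["created_at"] + window <= as_of].copy()
    won_in_window = mature["won_at"].notna() & (mature["won_at"] - mature["created_at"] <= window)
    mature["label"] = won_in_window.astype(int)
    return mature


leads = pd.DataFrame({
    "lead_id": [1, 2, 3],
    "created_at": pd.to_datetime(["2023-01-01", "2023-02-01", "2025-05-01"]),
    "won_at": pd.to_datetime(["2024-06-01", None, None]),
})
labeled = label_leads(leads, as_of=pd.Timestamp("2025-06-01"), window_days=540)
print(labeled[["lead_id", "label"]])  # lead 3 is too recent to label
```

Lead 1 closed 17 months after creation and is correctly labeled 1; a naive "has it closed within 90 days?" rule would have labeled it 0.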

B. Feature Engineering: The Predictive Inputs

Feature Engineering is the process of transforming raw data into predictive variables (features) that the algorithm can process. This is the difference between a generic model and a high-performing system.

We categorize features into three critical buckets:

| Feature Category | Definition | Example Features |
|---|---|---|
| Firmographic/Demographic | Static attributes of the lead or company. | Industry vertical, company size (employees), annual revenue, job title seniority (C-Suite vs. Manager), geographic region. |
| Behavioral/Engagement | Observed actions across digital channels. | Website visits (high-intent pages like Pricing or Demo), content downloads (whitepapers), email opens, specific product usage (for freemium models), time spent on site. |
| Temporal/Recency | The timing and velocity of engagement. | Time since last interaction (Recency), frequency of engagement in the last 30 days, total time elapsed since initial lead creation. |

Strategic Insight: The most powerful feature is often Intent Velocity (how quickly a prospect moves through high-value steps). The model must weigh rapid progression far more heavily than stagnant, passive engagement.
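As a rough illustration of the Temporal/Recency bucket, here is a minimal pandas sketch that derives recency, 30-day frequency, and one possible definition of intent velocity (distinct high-intent pages reached per day since first touch) from a raw event log. The column names, the `HIGH_INTENT_PAGES` set, and the velocity formula are all hypothetical; your own definition should come from what separates closed-won from closed-lost leads in your data.

```python
import pandas as pd

HIGH_INTENT_PAGES = {"pricing", "demo", "roi_calculator"}  # hypothetical page keys


def engagement_features(events: pd.DataFrame, as_of: pd.Timestamp) -> pd.DataFrame:
    """Per-lead recency, 30-day frequency and a simple intent-velocity feature.

    Assumes hypothetical columns: lead_id, ts (timestamp), page.
    """
    ev = events[events["ts"] <= as_of]
    by_lead = ev.groupby("lead_id")
    first_touch = by_lead["ts"].min()
    feats = pd.DataFrame(index=first_touch.index)

    # Recency: whole days since the most recent interaction of any kind.
    feats["recency_days"] = (as_of - by_lead["ts"].max()).dt.days
    # Frequency: interactions in the trailing 30 days.
    recent = ev[ev["ts"] > as_of - pd.Timedelta(days=30)]
    feats["events_30d"] = recent.groupby("lead_id").size().reindex(feats.index, fill_value=0)

    # Intent velocity (one possible definition): distinct high-intent pages
    # reached, divided by days from first touch to the latest such visit (+1).
    hi = ev[ev["page"].isin(HIGH_INTENT_PAGES)].groupby("lead_id")
    steps = hi["page"].nunique().reindex(feats.index, fill_value=0)
    days = (hi["ts"].max().reindex(feats.index) - first_touch).dt.days
    feats["intent_velocity"] = (steps / (days + 1)).fillna(0.0)
    return feats


events = pd.DataFrame({
    "lead_id": [1, 1, 1, 2, 2],
    "ts": pd.to_datetime(["2025-05-01", "2025-05-02", "2025-05-03", "2025-03-01", "2025-05-20"]),
    "page": ["blog", "pricing", "demo", "blog", "blog"],
})
feats = engagement_features(events, pd.Timestamp("2025-05-31"))
print(feats)
```

Lead 1 reaches pricing and demo within two days of first touch and gets a high velocity; lead 2 has recent activity but no high-intent steps, so its velocity is zero.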

Step #2: Algorithm Selection (The Engine Room)

When building a PLS model, we are dealing with a binary classification problem: Will the lead convert (1) or not (0)?

While Deep Learning (Neural Networks) is powerful, it lacks interpretability. SDRs need to know *why* a lead received a high score. Therefore, we rely on established, robust, and easily auditable models.

A. Logistic Regression (The Baseline)

Logistic Regression is the foundational algorithm for classification. It outputs a probability score (between 0 and 1) that the lead belongs to the positive class (conversion).

- Pros: Highly interpretable (you can see the weight assigned to each feature), fast to train, excellent starting point.

- Cons: Assumes a linear relationship between the features and the log-odds of conversion, which often doesn’t capture complex buyer journeys.

B. Random Forests (The Powerhouse)

A Random Forest model operates by building hundreds of independent decision trees and aggregating their results. This handles the complex, non-linear interactions inherent in modern sales cycles.

- Pros: Exceptional accuracy, handles outliers well, reports feature importance automatically, and is far less prone to overfitting than a single decision tree.

- Cons: Less directly interpretable than Logistic Regression (though modern tools provide feature importance scores).

Our Recommendation: Start with Logistic Regression to establish a baseline. Then, deploy a Random Forest model. The Random Forest will almost always provide superior predictive power, allowing you to prioritize outreach with higher confidence.
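A minimal sketch of the baseline-then-ensemble workflow, assuming scikit-learn and NumPy. The data is synthetic and hypothetical: conversion depends on company size in a non-linear "mid-market sweet spot" pattern (roughly 90 to 665 employees) that a plain logistic regression, being linear in its inputs, cannot represent. ROC AUC under 5-fold cross-validation is used here only as a convenient ranking metric; the threshold-level metrics discussed later matter more in production.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

rng = np.random.default_rng(42)
n = 4000

# Hypothetical leads: log(employee count) plus three pure-noise columns.
log_size = rng.uniform(1, 9, n)
X = np.column_stack([log_size, rng.normal(size=(n, 3))])

# Non-linear "sweet spot": mid-market firms convert at 70%, everyone else at 10%.
in_band = (log_size > 4.5) & (log_size < 6.5)
y = rng.binomial(1, np.where(in_band, 0.7, 0.1))

models = {
    "logistic_baseline": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=200, min_samples_leaf=20, random_state=0),
}
# Mean ROC AUC over 5 stratified folds for each candidate.
scores = {
    name: cross_val_score(model, X, y, cv=5, scoring="roc_auc").mean()
    for name, model in models.items()
}
print(scores)
```

On data like this the forest should score clearly higher. When the signal really is close to linear, the baseline may match or beat the forest, which is exactly why the baseline is worth keeping as a reference point.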

The Failure of Static Scoring: Why AI Wins

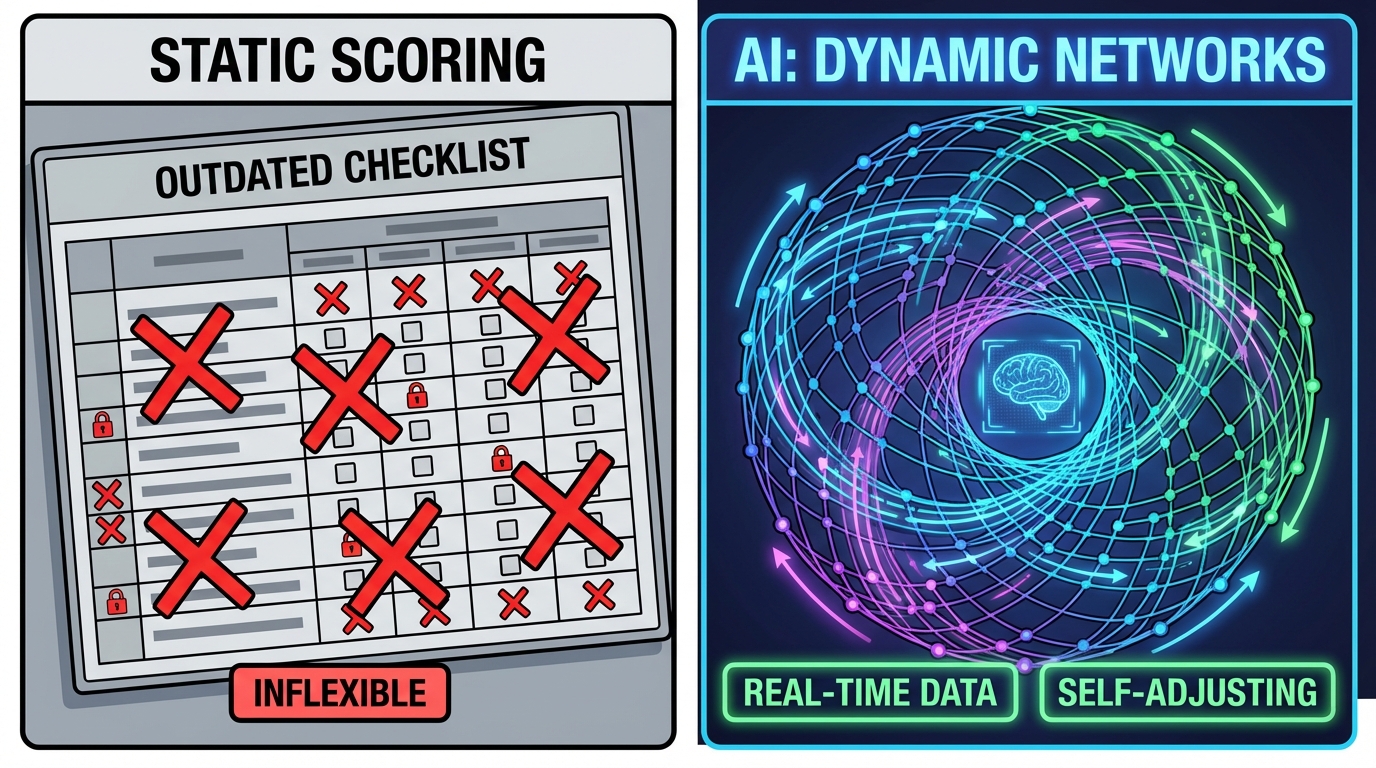

Static scoring relies on one flawed premise: That the buyer journey is linear. It is not.

This legacy system (set rules, assign points, hope for the best) worked in 2010. Today, it fails catastrophically because the modern B2B funnel is non-linear and complex.

The core failure of traditional models is mistaking correlation for causation.

We assume a VP of Marketing from a 500-person company visiting the pricing page is a high-intent signal. Our internal data shows this is often a false positive (competitive research, early-stage exploration).

Simultaneously, static rules miss the *true* high-intent signals entirely. A low-score lead might be a perfect-fit persona who sent only one targeted email: a signal that only predictive analysis can weight correctly.

Predictive scoring flips the script entirely. It uses your historical database (thousands of converted customers vs. thousands of lost opportunities) to identify the true conversion DNA.

It does not guess. It calculates probability.

Strategic Shift: Manual vs. AI Predictive Scoring

Before you commit resources to an AI deployment, you must internalize this shift. The difference between legacy systems and AI is not incremental; it is foundational, impacting everything from SDR allocation to pipeline forecasting.

Here is the strategic comparison:

| Feature | Manual/Static Scoring | Predictive/AI Scoring |
|---|---|---|
| Data Volume Handled | Low (5-15 predefined variables). | High (100s or 1000s of variables, including unstructured data). |
| Adaptability | Static. Requires manual, time-consuming updates (monthly/quarterly). | Dynamic. Automatically recalibrates weights based on new conversion data and market shifts. |
| Bias Introduction | High (subjective criteria set by S&M teams introduce human bias). | Low (bias enters only through flawed or incomplete training data). |
| Output | Simple Score (e.g., 85/100) or Grade (A-D). | Conversion Probability (e.g., 87.5% likelihood to convert) and Key Trait Drivers. |
| Insight Provided | Isolated correlations (This lead did X, Y, Z). | Multi-variable patterns learned from outcomes (This lead possesses traits common to 90% of our closed-won deals). |

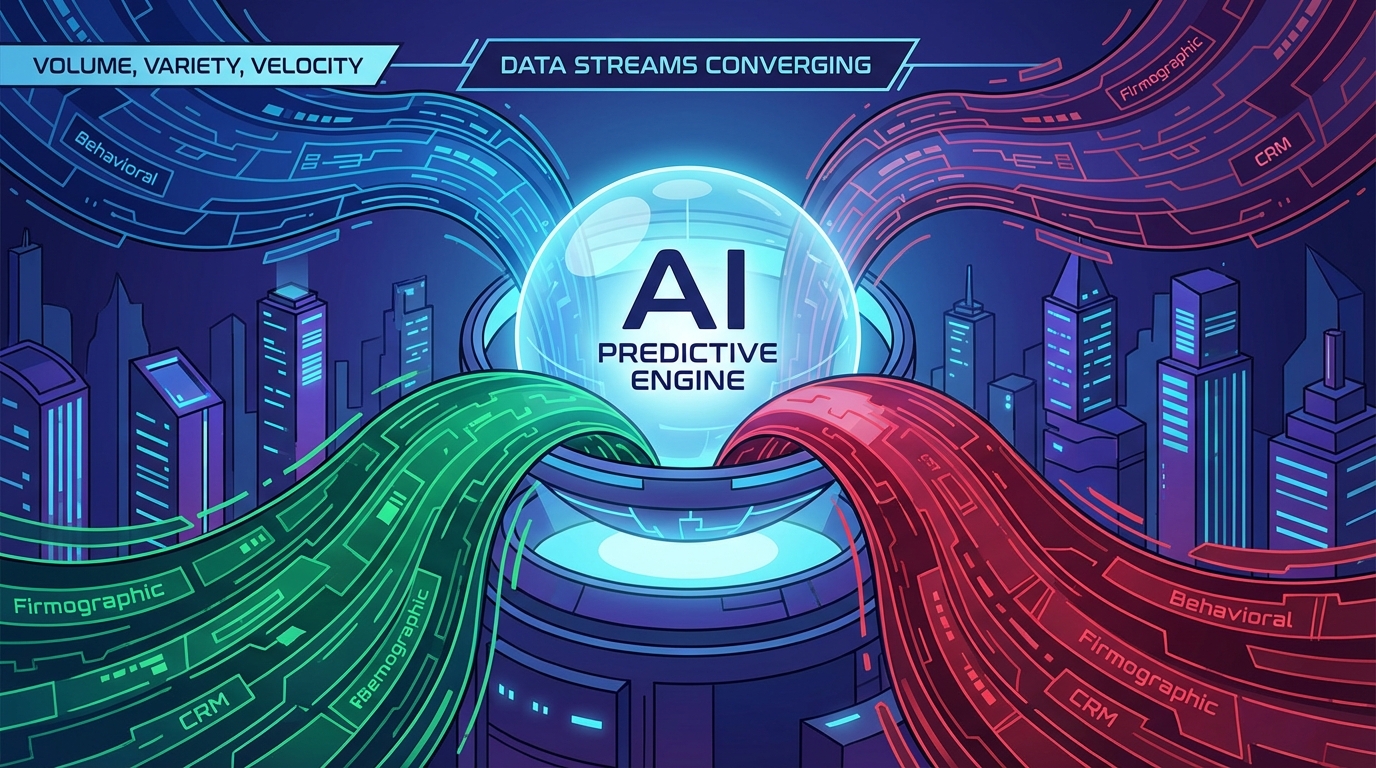

Core Data Streams: Fueling the Predictive Engine

The predictive engine demands high-octane fuel. Garbage in equals useless scores: that remains the universal truth of AI modeling.

Your model’s accuracy hinges entirely on the quality and diversity of the input data. We rely on four non-negotiable data streams to construct a robust Predictive Lead Scoring (PLS) architecture.

- Firmographic Data (The Fit): This defines the fundamental company context. It confirms market viability.

  - Industry, size, revenue, and geographical location.

  - Technology stack (technographic data): Are they already using complementary or competing systems?

  - Recent funding rounds or critical news announcements (signals of growth or pain).

This answers: Does this company structurally need our solution? We leverage this data to define the foundational fit: the Targeted Buyer Persona (TBP). If the company doesn’t fit the TBP, the highest score is irrelevant.

- Demographic Data (The Role): This defines the individual contact’s influence and authority.

  - Job title and seniority level (VP vs. Manager).

  - Decision-making authority (DM) or influence point status.

  - Department and reporting structure (Do they have budget control?).

This answers: Is this person the right influence point? We must confirm they possess the power to move the deal forward.

- Behavioral Data (The Interest): This tracks interaction velocity and engagement with your owned assets.

  - Website visits, specific page views, and time on site (intensity).

  - High-intent content interactions (e.g., ROI calculators, technical spec downloads, pricing pages).

  - Email opens and clicks (responsiveness).

  - Repeated interactions over a short window (velocity signal).

This answers: How interested is this prospect right now? Crucially, we must map this individual activity back to the account level. This requires dedicated IP tracking tools; otherwise, the data is siloed noise. Our guide, Best Software That Tracks Website Visitor IP Address for Leads, covers how we achieve this account-based mapping.
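Once each visit or email event is tied to a company, account-level mapping is essentially a group-by. A minimal pandas sketch, assuming each activity row already carries a contact email; it uses the lower-cased email domain as a stand-in account key. That is a simplification (free-mail domains such as gmail.com would lump unrelated people together), whereas a dedicated IP-resolution tool would supply a proper account ID. Column and function names are hypothetical.

```python
import pandas as pd


def account_engagement(activity: pd.DataFrame) -> pd.DataFrame:
    """Roll contact-level engagement up to one row per account."""
    df = activity.copy()
    # Stand-in account key: lower-cased email domain (an IP tool would replace this).
    df["account"] = df["email"].str.split("@").str[1].str.lower()
    return df.groupby("account").agg(
        contacts_engaged=("email", "nunique"),
        pricing_views=("pricing_views", "sum"),
        downloads=("downloads", "sum"),
    )


activity = pd.DataFrame({
    "email": ["ana@acme.com", "raj@Acme.com", "lee@globex.io"],
    "pricing_views": [2, 1, 0],
    "downloads": [0, 3, 1],
})
accounts = account_engagement(activity)
print(accounts)
```

Two moderately engaged contacts at the same company now show up as one account with combined activity, which is the buying-committee signal that contact-level scoring misses.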

- Intent Data (The Timing): This is the external signal of immediate purchase readiness.

  - Third-party data showing research on competitor products or solutions.

  - Surge in topic consumption related to your solution category (high-volume searches).

  - Engagement with review sites or industry forums related to buying cycles.

This answers: Is this prospect actively looking to buy in the next 90 days? This data stream provides the necessary context for sales urgency. It is the difference between an interested lead (Behavioral) and a lead actively shopping right now (Intent).

Under the Hood: The Core ML Algorithms

We discussed the fuel (data). Now, we must analyze the engine. The algorithms are what transform raw data streams into measurable, actionable revenue predictions.

When engineering Predictive Lead Scoring (PLS), we are dealing with a fundamental classification problem. The model is trained to answer one binary question:

- Will this lead convert? (1)

- Will this lead churn/stall? (0)

The resulting probability score (e.g., 92.5%) is the model’s calculated confidence level in that classification. To achieve scalable accuracy, we rely on a strategic combination of foundational and advanced models.

The Foundational Classifier: Logistic Regression

Logistic Regression (LR) is the baseline algorithm for any binary classification task. It’s the essential starting point for three critical reasons.

LR estimates the probability of conversion by fitting data to a simple logistic function, restricting the output cleanly between 0 and 1.
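In symbols, writing $\mathbf{x}$ for a lead's feature vector and $\beta_0, \boldsymbol{\beta}$ for the learned intercept and coefficients, the model is:

$$
p(y = 1 \mid \mathbf{x}) = \frac{1}{1 + e^{-(\beta_0 + \boldsymbol{\beta}^{\top}\mathbf{x})}}
\qquad\Longleftrightarrow\qquad
\log\frac{p}{1 - p} = \beta_0 + \sum_{j} \beta_j x_j
$$

The right-hand form is where the interpretability comes from: $\beta_j$ is the change in the log-odds of conversion for a one-unit increase in $x_j$ with the other features held fixed, so $e^{\beta_j}$ is an odds ratio. For example, a hypothetical coefficient of 0.7 on pricing-page visits would mean each additional visit multiplies the odds of conversion by about $e^{0.7} \approx 2.0$.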

Why Pyrsonalize Starts With LR:

- Transparency: It is highly interpretable. When features are standardized, LR coefficients immediately tell us which variables (e.g., company size, specific page visits) have the strongest linear relationship with the log-odds of conversion.

- Speed & Efficiency: It trains quickly, allowing us to establish a rapid baseline performance metric against which all future, complex models are measured.

- Feature Selection: It effectively identifies the primary, most influential factors, helping us prune unnecessary or redundant data streams before moving to resource-intensive models.
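The transparency and feature-selection points can be seen in a few lines of scikit-learn. This is a minimal sketch on hypothetical synthetic data in which only two of five features actually drive conversion; the feature names and the penalty strength `C=0.1` are illustrative. Standardizing first makes coefficient magnitudes comparable, and the L1 penalty pushes the coefficients of irrelevant features toward zero, which is one common way to prune redundant inputs.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
names = ["pricing_visits", "company_size", "noise_a", "noise_b", "noise_c"]
X = rng.normal(size=(2000, len(names)))

# Hypothetical ground truth: only the first two columns influence conversion.
logit = -1.0 + 1.5 * X[:, 0] + 1.0 * X[:, 1]
y = rng.binomial(1, 1 / (1 + np.exp(-logit)))

# Standardizing makes coefficient magnitudes comparable across features;
# the L1 penalty shrinks weak coefficients toward (often exactly) zero.
model = make_pipeline(
    StandardScaler(),
    LogisticRegression(penalty="l1", solver="liblinear", C=0.1),
)
model.fit(X, y)

coefs = dict(zip(names, model[-1].coef_[0]))
for name, c in sorted(coefs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15s}  {c:+.3f}")
```

The printed ranking should put `pricing_visits` and `company_size` at the top with the noise columns at or near zero. A smaller `C` means a stronger penalty and more aggressive pruning.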

Handling Non-Linearity: Ensemble Methods

The reality of buyer behavior is rarely linear. Leads don’t follow simple A+B=C paths. They jump, stall, and double back. This is where advanced ensemble algorithms step in to capture complexity.

Random Forests (RF)

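Random Forests is an ensemble technique that builds hundreds of individual decision trees during training, each on a random bootstrap sample of the leads and considering a random subset of features at each split. It then aggregates the output, using the “mode” (majority vote) of all trees as the final classification; many implementations, including scikit-learn, instead average the trees’ predicted probabilities, which yields the probability score used for ranking.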

RF is essential for real-world sales data:

- Non-Linearity: It excels at identifying complex, non-linear interactions. For example: “Leads who download Resource X, but only if they are in Industry Y and visit the pricing page three times, convert at 95%.”

- Robustness: RF handles massive, high-dimensional data (hundreds of variables) and is relatively resistant to overfitting.
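To illustrate the robustness point, here is a minimal sketch on hypothetical synthetic data (scikit-learn's `make_classification`): 200 columns, of which only the first 10 carry signal. It reports held-out ROC AUC and compares the forest's built-in, impurity-based feature importance on signal versus noise columns. Impurity-based importances are known to be biased toward high-cardinality features; `sklearn.inspection.permutation_importance` is a more reliable but slower alternative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Hypothetical wide lead table: 200 columns, only the first 10 carry signal
# (shuffle=False keeps the informative columns at the front).
X, y = make_classification(
    n_samples=3000, n_features=200, n_informative=10, n_redundant=0,
    shuffle=False, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

rf = RandomForestClassifier(n_estimators=300, min_samples_leaf=5, random_state=0, n_jobs=-1)
rf.fit(X_train, y_train)
test_auc = roc_auc_score(y_test, rf.predict_proba(X_test)[:, 1])

# Impurity-based importances: compare the 10 signal columns with the 190 noise columns.
importances = rf.feature_importances_
print(f"held-out AUC: {test_auc:.3f}")
print(f"mean importance, signal columns: {importances[:10].mean():.4f}")
print(f"mean importance, noise columns:  {importances[10:].mean():.4f}")
```

Even with 190 irrelevant columns, the forest should still rank leads well on unseen data and concentrate its importance on the signal columns.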

Gradient Boosting Machines (GBM)

Gradient Boosting Machines (GBM) and their optimized variants, like XGBoost, are the power hitters of predictive modeling. They are designed for maximum accuracy.

GBM builds trees sequentially. Crucially, each new tree focuses specifically on correcting the errors and misclassifications made by the previous trees. This iterative error correction drives performance to the highest possible level.

The Trade-Off: While GBM often yields the highest predictive accuracy, it requires meticulous tuning and is less transparent than LR. We deploy GBM only when maximum performance is required in production, ensuring we have robust validation to prevent overfitting.
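Both the sequential error correction and the tuning burden are visible in scikit-learn's `GradientBoostingClassifier`. A minimal sketch on hypothetical synthetic data; the hyperparameter values are illustrative starting points, not tuned recommendations. Early stopping on an internal validation split is one standard guard against overfitting, and XGBoost and LightGBM expose comparable early-stopping options under their own parameter names.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

# Hypothetical synthetic lead data.
X, y = make_classification(n_samples=3000, n_features=30, n_informative=8, random_state=1)

gbm = GradientBoostingClassifier(
    n_estimators=1000,         # upper bound; early stopping normally halts far sooner
    learning_rate=0.1,         # each new tree corrects only a fraction of the remaining error
    max_depth=3,               # shallow trees keep each correction simple
    subsample=0.8,             # fit each tree on 80% of rows (stochastic boosting)
    validation_fraction=0.15,  # internal hold-out used only to decide when to stop
    n_iter_no_change=20,       # stop after 20 rounds without validation improvement
    random_state=0,
)
gbm.fit(X, y)
print("trees fitted before early stopping:", gbm.n_estimators_)
```

`n_estimators_` reports how many sequential trees were actually kept; lowering `learning_rate` usually needs more trees but can generalize slightly better.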

Our Strategic Algorithm Deployment

We do not rely on a single algorithm. Our architecture uses a structured, two-phase approach:

- Phase 1 (Baseline): Start with Logistic Regression. Establish core feature importance and a measurable performance baseline.

- Phase 2 (Production): Deploy an advanced Ensemble Model (RF or XGBoost). This guarantees maximum predictive accuracy in a live production environment, ensuring your sales team is acting on the most reliable data possible.

This layered approach ensures both high interpretability and peak operational performance: a non-negotiable standard for driving measurable revenue increase.

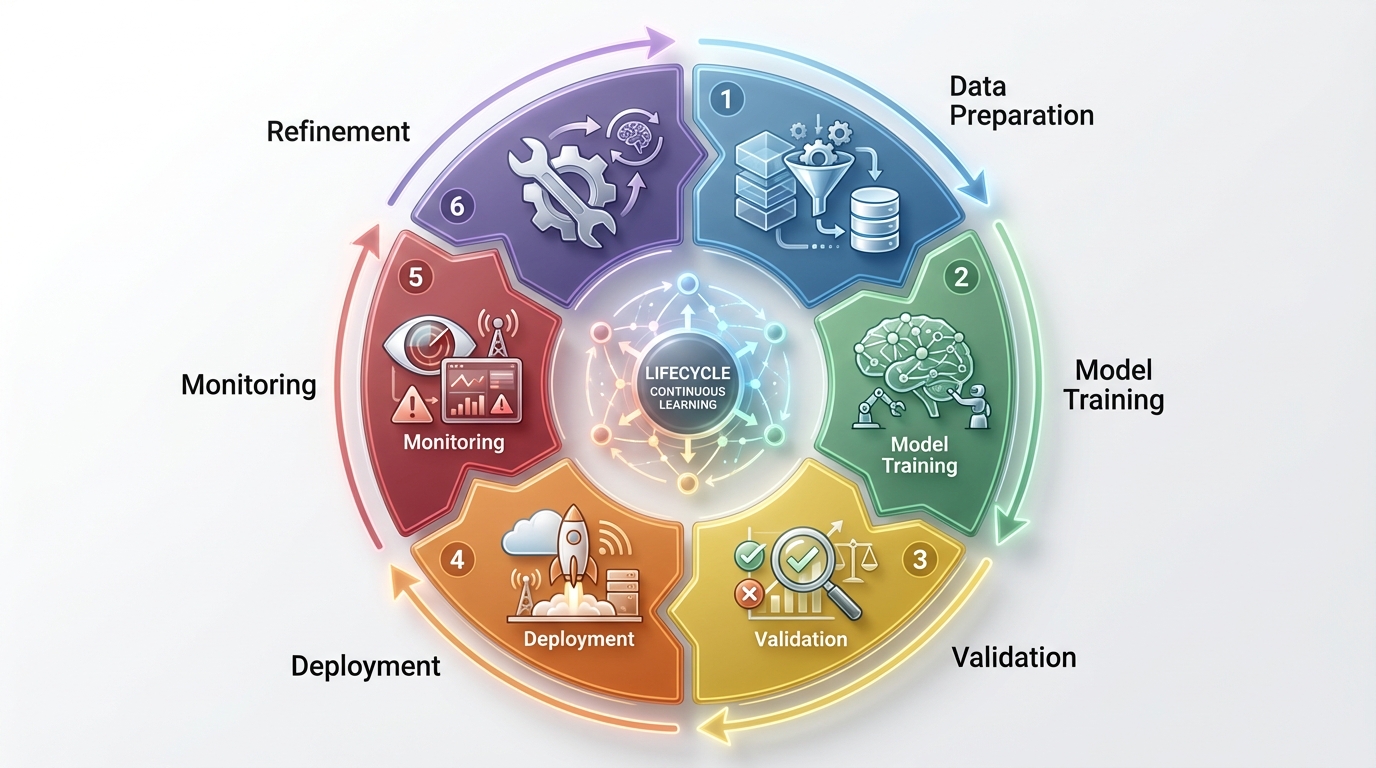

The 6-Step Predictive Model Lifecycle

Building a Predictive Lead Scoring (PLS) model is never a one-time project. It is a continuous, closed-loop RevOps system.

This is the precise, step-by-step blueprint our data science teams use to guarantee measurable lift in lead conversion rates.

Step #1: Feature Engineering and Data Preparation

This is the single most critical step. Garbage in means guaranteed model failure.

We take raw data (CRM records, website logs, email activity) and transform it into meaningful features the ML model can actually interpret.

- Data Cleaning: Mandatory removal of duplicates, handling missing values, and standardization. (Non-negotiable data hygiene.)

- Feature Creation: Raw data transformation is mandatory. Instead of tracking a simple “number of page visits,” we engineer high-value features like “average time spent on high-intent pages in the last 30 days.” This extracts genuine predictive power.

- Data Labeling: Define the target variable with absolute clarity. A lead is labeled ‘1’ (Converted) only after reaching a specific, measurable milestone (e.g., Closed-Won deal or SQL stage within 90 days).

Step #2: Training and Validation

We strategically split the labeled data into three sets: Training (70%), Validation (15%), and Test (15%).

The model learns patterns from the Training set. We use the Validation set exclusively to tune hyperparameters and select the top-performing algorithm.

Crucial Insight: Training data must reflect current market reality. Training a PLS model on data older than 24 months introduces significant, measurable bias. Your predictions will fail.
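A minimal sketch of the 24-month window plus the 70/15/15 split, assuming pandas, scikit-learn, and a hypothetical lead table with `created_at` and `label` columns. It splits in two stages (70/30, then halving the 30%) with stratification so each set keeps the same conversion rate. A random split is the simplest option; a time-ordered split (train on older leads, validate and test on the newest) is often a more honest simulation of production.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split


def recent_three_way_split(df, date_col, label_col, as_of, months=24, seed=0):
    """Drop leads older than `months`, then split 70/15/15 with stratification."""
    cutoff = as_of - pd.DateOffset(months=months)
    recent = df[df[date_col] >= cutoff]
    train, rest = train_test_split(
        recent, test_size=0.30, stratify=recent[label_col], random_state=seed
    )
    # Halve the remaining 30%: 15% validation (tuning), 15% test (final check).
    val, test = train_test_split(
        rest, test_size=0.50, stratify=rest[label_col], random_state=seed
    )
    return train, val, test


# Hypothetical history: 2,000 labeled leads created over the last ~3 years.
rng = np.random.default_rng(0)
as_of = pd.Timestamp("2025-06-30")
leads = pd.DataFrame({
    "created_at": as_of - pd.to_timedelta(rng.integers(0, 3 * 365, 2000), unit="D"),
    "label": rng.binomial(1, 0.2, 2000),
})
train, val, test = recent_three_way_split(leads, "created_at", "label", as_of)
print(len(train), len(val), len(test))
```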

Step #3: Model Evaluation (Metrics Selection)

Before deployment, we evaluate the model’s performance on the unseen Test set.

We immediately discard general accuracy metrics. (They tell you nothing about business cost.) We use specialized metrics that directly reflect the cost of false positives (wasting SDR time) and false negatives (missing revenue opportunities). (We detail Precision and Recall in the next section.)
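A quick illustration of why raw accuracy misleads: in a hypothetical pipeline where only 3% of leads convert, a "model" that never predicts conversion scores 97% accuracy while catching zero buyers. Minimal sketch, assuming scikit-learn.

```python
import numpy as np
from sklearn.metrics import accuracy_score, precision_score, recall_score

# Hypothetical pipeline: 1,000 leads, 30 of which convert (3%).
y_true = np.array([1] * 30 + [0] * 970)
# A useless "model" that predicts non-conversion for every lead.
y_pred = np.zeros_like(y_true)

acc = accuracy_score(y_true, y_pred)
rec = recall_score(y_true, y_pred)
# No lead is flagged, so precision is undefined; report it as 0.
prec = precision_score(y_true, y_pred, zero_division=0)
print(f"accuracy={acc:.2f}  recall={rec:.2f}  precision={prec:.2f}")
```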

Step #4: Deployment and Scoring

Once validated, the model is deployed. This is typically done via a secure API or integrated directly into your CRM/MAP platform (e.g., Salesforce, HubSpot).

New inbound leads must be scored instantly. The score is immediately visible to the SDR team, triggering automated, high-priority workflows based on defined score thresholds.

Step #5: Monitoring for Degradation

Deployment is not the finish line. It is the starting gun for continuous monitoring. We must aggressively watch for two primary issues that kill model performance:

- Data Drift: When the characteristics of the incoming live data change significantly from the original training data. This happens when the market shifts, competitors emerge, or a new product is launched.

- Concept Drift: When the relationship between the input variables and the target variable changes. The definition of a quality lead has changed. What made a lead convert six months ago no longer holds true today.
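One common way to quantify data drift for a single input feature is the Population Stability Index (PSI), which compares how a feature's values are spread across bins at training time versus in live traffic. A minimal NumPy sketch on hypothetical company-size data; decile binning is a typical choice, and the frequently cited rule of thumb (below 0.1 stable, above 0.25 a significant shift) is an industry convention rather than a statistical guarantee. PSI only detects data drift; concept drift shows up in outcome metrics such as live precision once conversions are labeled.

```python
import numpy as np


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI of one feature: training-time sample (`expected`) vs. live sample (`actual`)."""
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Interior bin edges at the training deciles; values beyond them fall in the end bins.
    inner = np.quantile(expected, np.linspace(0, 1, bins + 1)[1:-1])
    e_frac = np.bincount(np.searchsorted(inner, expected, side="right"), minlength=bins) / len(expected)
    a_frac = np.bincount(np.searchsorted(inner, actual, side="right"), minlength=bins) / len(actual)
    # Floor empty bins so the log term stays finite.
    e_frac = np.clip(e_frac, eps, None)
    a_frac = np.clip(a_frac, eps, None)
    return float(np.sum((a_frac - e_frac) * np.log(a_frac / e_frac)))


# Hypothetical company-size feature: training sample vs. two live samples.
rng = np.random.default_rng(0)
train_sizes = rng.lognormal(mean=5.0, sigma=1.0, size=5000)
live_same = rng.lognormal(mean=5.0, sigma=1.0, size=5000)
live_halved = rng.lognormal(mean=5.0 + np.log(0.5), sigma=1.0, size=5000)  # typical size halves

psi_same = population_stability_index(train_sizes, live_same)
psi_halved = population_stability_index(train_sizes, live_halved)
print(f"PSI, same distribution: {psi_same:.3f}")
print(f"PSI, sizes halved:      {psi_halved:.3f}")
```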

Step #6: Continuous Retraining Loop

To successfully combat drift, we establish an automated retraining schedule.

Every quarter (or sooner, whenever performance metrics drop below a defined, critical threshold), the model is automatically retrained on the most recent labeled data. This ensures the PLS system remains dynamic, accurate, and directly tied to current revenue outcomes.
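The retraining rule can be written as a small gate that a scheduler checks daily. A minimal sketch; the function name, the 91-day approximation of a quarter, and the precision floor are hypothetical. In practice `live_precision` can only be measured once enough recently scored leads have reached a final outcome, so it lags behind the scores themselves.

```python
from datetime import date, timedelta


def should_retrain(last_trained: date, today: date, live_precision: float,
                   precision_floor: float, max_age_days: int = 91) -> bool:
    """True if the model is about a quarter old or live precision is below the floor."""
    too_old = (today - last_trained) >= timedelta(days=max_age_days)
    degraded = live_precision < precision_floor
    return too_old or degraded


# One month after training, precision healthy: keep the current model.
print(should_retrain(date(2025, 1, 1), date(2025, 2, 1), live_precision=0.62, precision_floor=0.55))
```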

Measuring Success: Precision, Recall, and ROI

The selection of your core metric is not technical; it is a strategic mandate.

It defines your entire sales motion.

Do you prioritize raw lead volume? You optimize for one metric. Do you prioritize SDR efficiency and predictable revenue? You optimize for another.

At Pyrsonalize, we prioritize efficiency and operational leverage. We focus on Precision.

The Strategic Cost of Error: False Positives vs. False Negatives

Every prediction our models make falls into one of four categories. Understanding this matrix dictates where we set the lead scoring threshold:

- True Positive (TP): Model predicts conversion (1), lead converts (1). (The ideal, high-value result.)

- True Negative (TN): Model predicts non-conversion (0), lead does not convert (0). (Time saved for the SDR team.)

- False Positive (FP / Type I Error): Model predicts conversion (1), lead fails to convert (0). (The greatest efficiency killer: Wasted SDR time.)

- False Negative (FN / Type II Error): Model predicts non-conversion (0), lead converts anyway (1). (A potential missed opportunity, but generally less costly than FP.)

For any high-velocity sales organization, the greatest operational drain is the False Positive.

This is the “hot” lead that the model scored a 90, but ultimately wastes two hours of high-value SDR time before being disqualified. These false signals kill momentum and revenue predictability.

This core strategic reality dictates our focus:

Precision vs. Recall: The Strategic Trade-Off

- Precision: Reflects how well the model avoids False Positives. (Out of all leads predicted to convert, how many actually did?) High Precision ensures high SDR efficiency.

- Recall: Reflects how well the model avoids False Negatives. (Out of all leads that actually converted, how many did the model correctly identify?) High Recall ensures high volume capture.

Our Mandate: We tune our Predictive Lead Scoring models to maximize Precision (minimizing wasted effort). We are explicitly willing to accept a slightly lower Recall score if it guarantees that our SDRs spend 100% of their time on high-probability leads.
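In practice, tuning for precision mostly means choosing the score cut-off. A minimal sketch, assuming scikit-learn: it scans the precision-recall curve and returns the lowest threshold that still meets a target precision, which keeps as much recall as that target allows. The target value is a business decision, and the threshold should be chosen on the validation set rather than the test set; the ten leads below are hypothetical.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve


def threshold_for_precision(y_true, scores, target_precision):
    """Lowest score cut-off whose precision meets the target.

    Returns (threshold, precision, recall), or None if no cut-off reaches the target.
    """
    precision, recall, thresholds = precision_recall_curve(y_true, scores)
    # precision/recall carry one extra final point (precision=1, recall=0) with no threshold.
    meets = np.flatnonzero(precision[:-1] >= target_precision)
    if meets.size == 0:
        return None
    # Thresholds ascend and recall never rises as the cut-off rises,
    # so the first qualifying index keeps the most recall.
    i = meets[0]
    return float(thresholds[i]), float(precision[i]), float(recall[i])


# Hypothetical validation-set outcomes and model scores for ten leads.
y_true = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
scores = [0.1, 0.2, 0.3, 0.35, 0.5, 0.6, 0.65, 0.7, 0.8, 0.9]
print(threshold_for_precision(y_true, scores, target_precision=0.8))
# → threshold 0.5, precision ≈ 0.833, recall ≈ 0.833
```

Raising the target to near-perfect precision moves the cut-off up to 0.7 and cuts recall to 0.5: the trade-off described above, made explicit.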

Wasting time on false signals kills conversion rates and destroys SDR performance. For the metrics that matter, see our guide SDR Agent KPIs: The 2025 Blueprint for Predictable Revenue.

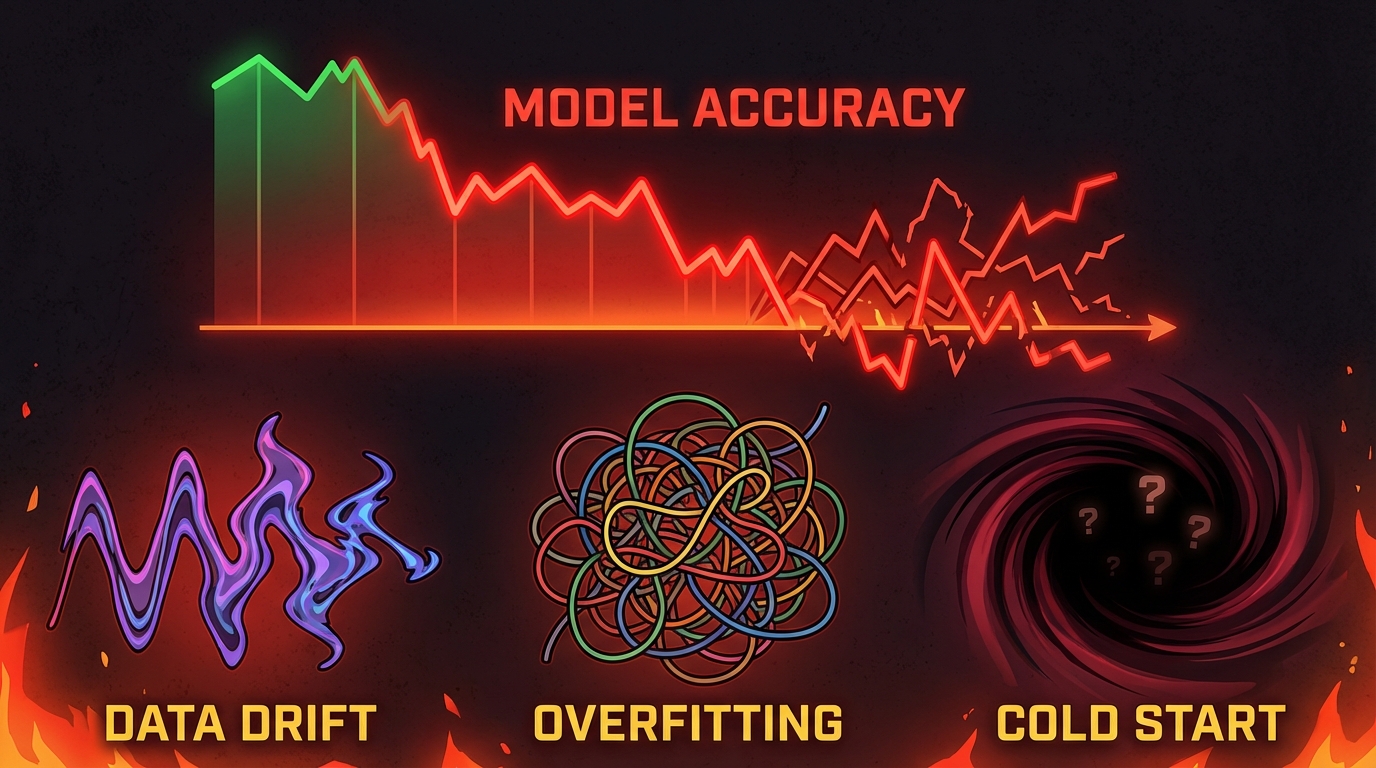

The Advanced Pitfalls: Why Your Predictive Model Will Fail (Data Drift, Overfitting, and Cold Starts)

We prioritize efficiency. But efficiency is fragile.

Even the best predictive models (the ones driving massive increases in sales velocity) will eventually degrade. Why? They fail when they become critically detached from reality.

Here are the three advanced challenges you must proactively manage to maintain high precision.

The Cold Start Problem: When Data is Insufficient

New companies, or those just migrating from manual scoring, face the Cold Start Problem.

A high-functioning Predictive Lead Scoring (PLS) model is a data-hungry beast. It requires thousands of historical data points (labeled conversions and non-conversions) to train effectively.

If you are a startup with only 50 closed-won deals, your model is statistically unreliable. It is basing its predictions on noise, not signal.

Mitigation: Our Strategy for New Systems

- Start Explicitly: Rely on explicit, rule-based scoring (e.g., Firmographic requirements, budget size) until you accumulate sufficient proprietary data. We set the minimum threshold at 100 successful conversions before trusting an AI model.

- Leverage Transfer Learning: Use third-party data providers (like Pyrsonalize) who train models on industry-wide benchmarks. We then fine-tune that robust model using your limited proprietary data. This is how you bypass the cold start without waiting 18 months.
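The "start explicitly" rule can be enforced with a simple gate that keeps rule-based scoring in place until the conversion history clears the 100-deal threshold. A minimal sketch; the rules, field names, and point values are hypothetical examples of explicit criteria, and `model` stands for any callable that scores a lead. Transfer learning from benchmark models is not shown here.

```python
from typing import Callable, Optional

MIN_CLOSED_WON = 100  # minimum threshold stated in this guide


def rule_based_score(lead: dict) -> int:
    """Hypothetical explicit rules (0-100) used while history is too thin."""
    score = 0
    if lead.get("industry") in {"saas", "fintech"}:  # hypothetical target industries
        score += 30
    if 50 <= lead.get("employees", 0) <= 1000:        # hypothetical size band
        score += 30
    if lead.get("budget_confirmed", False):           # explicit budget requirement
        score += 40
    return score


def choose_scorer(n_closed_won: int, model: Optional[Callable[[dict], float]] = None):
    """Switch to the ML model only once enough conversions have been labeled."""
    if model is not None and n_closed_won >= MIN_CLOSED_WON:
        return model
    return rule_based_score


print(choose_scorer(40)({"industry": "saas", "employees": 200}))  # → 60 (rules apply)
```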

Overfitting: The Fatal Flaw of Memorization

Overfitting is often misunderstood. It occurs when the model learns the noise and specifics of the training data too well. It essentially becomes a historian, not a predictor.

The result: It performs perfectly on your historical data (the training set) but fails miserably on new, unseen leads entering the funnel today.

The model is memorizing the past instead of learning generalizable, scalable patterns.

Mitigation: Building Robustness

- Cross-Validation is Mandatory: We always use techniques like K-Fold Cross-Validation during training. This forces the model to prove its accuracy across multiple data subsets, preventing it from relying on idiosyncrasies.

- Introduce Regularization: Simplify the model. We introduce mathematical regularization parameters (L1 or L2) to penalize overly complex features. This forces the model to focus only on the most significant predictors.

- The Crucial Hold-Out: Always test against a completely held-out test set (data the model has never seen). If the model scores 95% on training data but 60% on the held-out data, you have a severe overfitting problem.
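The train-versus-held-out gap can be measured directly with K-fold cross-validation. A minimal sketch, assuming scikit-learn and hypothetical noisy synthetic data: an unconstrained decision tree essentially memorizes the training folds and shows a large gap, while a depth-limited tree shows a much smaller one. Depth limits are the tree analogue of regularization; for logistic regression the equivalent lever is the L1/L2 penalty strength (the `C` parameter in scikit-learn).

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_validate
from sklearn.tree import DecisionTreeClassifier


def overfit_gap(model, X, y, cv=5):
    """Mean training AUC minus mean K-fold validation AUC."""
    res = cross_validate(model, X, y, cv=cv, scoring="roc_auc", return_train_score=True)
    return res["train_score"].mean() - res["test_score"].mean()


# Hypothetical noisy lead data: flip_y=0.2 assigns a random class to ~20% of rows.
X, y = make_classification(n_samples=2000, n_features=20, n_informative=5,
                           flip_y=0.2, random_state=0)

gap_deep = overfit_gap(DecisionTreeClassifier(random_state=0), X, y)                  # unconstrained
gap_shallow = overfit_gap(DecisionTreeClassifier(max_depth=4, random_state=0), X, y)  # constrained
print(f"unconstrained tree gap: {gap_deep:.3f}")
print(f"depth-4 tree gap:       {gap_shallow:.3f}")
```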

Data Drift: The Silent Killer of Revenue

Data drift is the single biggest reason why a model that was 90% precise last year is only 60% precise today. It signifies that the underlying reality of your buyer profile has changed, but your model’s assumptions have not.

Example: In 2022, “downloading the executive whitepaper” was a high-intent signal, indicating a serious prospect. In 2025, due to massive content saturation, that signal might be weak or even useless. If your model still weights whitepaper downloads highly, it has drifted (strictly, a case of concept drift: the link between the signal and conversion has changed), and it is actively wasting your SDR team’s time.

This drift kills precision and operational leverage.

Mitigation: Continuous Monitoring and Retraining

- Automated Alert Systems: Implement alerts that flag significant shifts in input feature distributions. If the average company size of inbound leads suddenly drops by 50%, your model needs immediate review.

- Enforce the Retraining Loop: You cannot deploy a model and forget it. We enforce a strict, quarterly retraining loop (or whenever drift is detected). This forces the model to relearn the current market reality using the most recent data.

Operationalizing the PLS: Integration and Alignment

A Predictive Lead Score (PLS) means nothing if your SDR team ignores it.

We built the model to convert, not just to calculate. Integration must be seamless. The score must drive immediate, measurable action inside your existing workflow.

Step #1: CRM Integration is Non-Negotiable

The PLS score is your single source of truth. It must reside inside your CRM (Salesforce, HubSpot, Pipedrive). It cannot be hidden in a spreadsheet or a third-party dashboard.

The score must be a primary, sortable field for every Sales Development Representative (SDR). This is how we enforce prioritization.

Operationalizing the Score: Automated Routing Rules

We use the PLS score to dictate lead routing, ensuring speed-to-lead is maximized for the most valuable prospects. This requires zero human intervention.

- High Priority (90+): Instant routing to our top 1% SDRs. We enforce a 10-minute Speed-to-Lead SLA for these accounts. This is where revenue is made.

- Medium Priority (60-89): Automatic injection into a personalized, aggressive nurturing sequence. The model flagged them as qualified, but not yet ready to buy. Aggressive follow-up is mandatory here.

- Low Priority (Below 60): Route directly back to Marketing Automation for long-term, low-touch education. They are not worth an SDR’s time right now.
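The routing tiers above reduce to a simple mapping from score to queue. A minimal sketch; the queue names and the `Route` type are hypothetical placeholders for whatever your CRM's assignment rules or workflow engine expect. The boundaries follow the 90+ / 60-89 / below-60 tiers, and a fractional score such as 89.5 falls into the medium tier.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    queue: str                  # hypothetical queue/workflow name
    sla_minutes: Optional[int]  # speed-to-lead SLA; None means no SLA


def route_lead(score: float) -> Route:
    """Map a 0-100 predictive lead score to a routing tier."""
    if not 0 <= score <= 100:
        raise ValueError(f"score out of range: {score}")
    if score >= 90:
        return Route("top_sdr_queue", 10)       # High priority: 10-minute SLA
    if score >= 60:
        return Route("nurture_sequence", None)  # Medium priority: automated nurturing
    return Route("marketing_automation", None)  # Low priority: long-term education


print(route_lead(93))
```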

Step #2: Strategic Alignment Through Objective Data

Predictive scoring doesn’t just improve efficiency. It ends the eternal, unproductive war between Sales and Marketing.

Why? It provides an objective, data-driven definition of quality.

Marketing can no longer hide behind vanity metrics. If the leads they generate consistently score below 70, they are failing to deliver quality, regardless of MQL volume.

The PLS score becomes the single shared KPI (Key Performance Indicator). Both teams are responsible for optimizing the inputs:

- Marketing: Optimizes volume and input quality (e.g., better targeting, clearer ad copy).

- Sales: Provides rapid conversion feedback and execution data (e.g., why a 95-score lead didn’t close).

We eliminated internal arguments about ‘lead quality’ the day we implemented PLS. The score is objective. If Marketing delivers leads scoring 95+, Sales is contractually obligated to close them. If they fail, the problem is sales execution, not the lead source. The model shifts the blame (and the focus) to measurable outcomes.

Ready to shift your focus from MQL arguments to measurable revenue? Start Your Free Trial and find the clients your model predicts.

AI Lead Scoring: Essential Strategy FAQs

- What is the difference between PLS and MQL scoring?

  - This is the core distinction between outdated sales methods and modern AI deployment. MQL (Marketing Qualified Lead) scoring is static and subjective. It operates on predefined, manual rules (e.g., 5 points for visiting the pricing page). This approach is prone to human bias and quickly becomes irrelevant.

  - PLS (Predictive Lead Scoring) is dynamic. It uses machine learning to analyze thousands of data points (behavioral, firmographic, and historical) simultaneously. The output is a real-time conversion probability based on our historical success patterns. PLS is objective and future-focused; MQL scoring is manual and stagnant.

- How much historical data do I need to train a reliable model?

  - We need high-quality data to avoid the Cold Start Problem entirely. While simple models can technically start with less, we don’t recommend relying on them. For true reliability (and to leverage advanced methods like Gradient Boosting), you need a robust foundation.

  - We strongly recommend a minimum dataset of 100 to 200 labeled ‘Closed-Won’ deals and an equal number of ‘Closed-Lost’ opportunities. This volume ensures the model can generalize patterns effectively across your Ideal Customer Profile (ICP).

- Should I prioritize Precision or Recall in my PLS model?

  - This is a strategic decision that directly impacts SDR productivity. For most high-ticket B2B sales organizations focused on efficiency, you must prioritize Precision.

  - High Precision means fewer False Positives. Your SDRs spend their limited time only on leads that the model is highly certain will convert. We prioritize quality over pipeline volume.

  - Prioritizing Recall increases the number of leads the model flags as likely to convert, so fewer real buyers slip through. However, this often floods your pipeline with low-quality prospects, risking significant wasted sales time and lower team morale.

Stop guessing. Start converting.

Use AI to find your clients’ personal emails and accelerate your outreach strategy.
